library(broom)

library(tidyverse)

library(pROC)

library(lmtest)

library(HDSinRdata)

data(nyts)14 Logistic Regression

This chapter builds on the previous chapter and continues with regression analysis in R. Specifically, we cover binary logistic regression using the glm() function, which can be used to fit generalized linear models. Many of the functions learned in the last chapter can also be used with a glm object. For example, the glm() function expects a formula in the same way as the lm() function. We also cover diagnostic plots and model evaluation specific to a binary outcome.

The data used in this chapter is from the 2021 National Youth Tobacco Survey (NYTS) (Centers for Disease Control and Prevention (CDC) 2021). This dataset contains 20,413 participants and a set of variables relating to demographic information, frequency of tobacco use, and methods of obtaining said tobacco as reported by students on the 2021 NYTS. We use logistic regression to examine whether survey setting is associated with youth reporting of current tobacco use, similar to the analysis presented in Park-Lee et al. (2023). Note that we ignore survey weights for this analysis.

We use the broom package again to present the estimated coefficients, the tidyverse package to create a calibration plot, the lmtest (Hothorn et al. 2022) package to perform likelihood ratio tests, and the pROC package (Robin et al. 2023) to create receiver operating characteristic curves.

14.1 Generalized Linear Models in R

The glm(formula, data, family) function in R is used to fit generalized linear models. The three main arguments we must specify to the function are:

formula- the relationship between the independent variables and the outcome of interest,

data- the dataset used to train the model, and

family- a description of the error distribution and link function to be used in the model.

In binary logistic regression, we assume a binomial outcome and use the logit link function. We can specify this by setting family = binomial. By default, this assumes the link function is the logit function. Note that we can even use the glm() function to implement linear regression by setting family = gaussian. Using our example from Chapter 13, running glm(SBP ~ LEAD, data = nhanes_df, family = gaussian) would be equivalent to lm(SBP ~ LEAD, data = nhanes_df).

Our outcome of interest is current e-cigarette use, e_cig_use, so we need to create this variable from the variables that are currently in the data. We set e_cig_use to 0 if the respondent answered that they have not used e-cigarettes in the last 30 days and 1 otherwise. We can see that there are only 1,435 respondents who reported e-cigarette use. This is a low percentage of the overall sample, which will likely impact our results.

nyts$e_cig_use <- as.factor(ifelse(nyts$num_e_cigs == 0, "0", "1"))

table(nyts$e_cig_use)

#>

#> 0 1

#> 18683 1435Looking at the covariate of interest, survey setting, we can see that there are 85 respondents who took the survey in “Some other place”. Since we are interested in the impact of taking the survey at school compared to other settings, we simplify this variable to have two levels: “school” and “home/other”.

table(nyts$location)

#>

#> At home (virtual learning) In a school building/classroom

#> 8738 10737

#> Some other place

#> 85

nyts$location <- ifelse(nyts$location ==

"In a school building/classroom",

"school", "home/other")

nyts$location <- as.factor(nyts$location)To start, we create a model to predict e-cigarette use from school setting adjusting for the covariates sex, school level, race, and ethnicity. Note that we specify our formula and data as with the lm() function. We then use the summary() function again to print a summary of this fitted model. The output is slightly different from an lm object. We can see the null and residual deviances are reported along with the AIC. Adding transformations and interactions is equivalent to that in the lm() function and is not demonstrated in this chapter.

mod_start <- glm(e_cig_use ~ grade + sex + race_and_ethnicity +

location, data = nyts, family = binomial)

summary(mod_start)

#>

#> Call:

#> glm(formula = e_cig_use ~ grade + sex + race_and_ethnicity +

#> location, family = binomial, data = nyts)

#>

#> Coefficients:

#> Estimate Std. Error z value

#> (Intercept) -4.6017 0.1539 -29.91

#> grade7th 0.4461 0.1753 2.54

#> grade8th 0.9677 0.1607 6.02

#> grade9th 1.3830 0.1549 8.93

#> grade10th 1.9183 0.1513 12.68

#> grade11th 2.1385 0.1491 14.34

#> grade12th 2.4286 0.1492 16.28

#> gradeUngraded or Other Grade 2.5213 0.4487 5.62

#> sexFemale 0.1922 0.0580 3.32

#> race_and_ethnicitynon-Hispanic Black -0.6614 0.1121 -5.90

#> race_and_ethnicitynon-Hispanic other race -0.1021 0.1515 -0.67

#> race_and_ethnicitynon-Hispanic White 0.1983 0.0739 2.68

#> locationschool 0.7223 0.0648 11.14

#> Pr(>|z|)

#> (Intercept) < 2e-16 ***

#> grade7th 0.01095 *

#> grade8th 1.7e-09 ***

#> grade9th < 2e-16 ***

#> grade10th < 2e-16 ***

#> grade11th < 2e-16 ***

#> grade12th < 2e-16 ***

#> gradeUngraded or Other Grade 1.9e-08 ***

#> sexFemale 0.00091 ***

#> race_and_ethnicitynon-Hispanic Black 3.6e-09 ***

#> race_and_ethnicitynon-Hispanic other race 0.50061

#> race_and_ethnicitynon-Hispanic White 0.00726 **

#> locationschool < 2e-16 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> (Dispersion parameter for binomial family taken to be 1)

#>

#> Null deviance: 9754.9 on 18746 degrees of freedom

#> Residual deviance: 8886.8 on 18734 degrees of freedom

#> (1666 observations deleted due to missingness)

#> AIC: 8913

#>

#> Number of Fisher Scoring iterations: 6We can use the tidy() function from the broom package to display the estimated coefficients from the previous model. This time we add the exponentiate = TRUE argument to exponentiate our coefficients so we can interpret them as estimated change in odds rather than log odds. For example, we can see that those who answered the survey at school have double the estimated odds of reporting e-cigarette use compared to those who took the survey at home/other, adjusting for grade, sex, race, and ethnicity.

tidy(mod_start, exponentiate = TRUE)

#> # A tibble: 13 × 5

#> term estimate std.error statistic p.value

#> <chr> <dbl> <dbl> <dbl> <dbl>

#> 1 (Intercept) 0.0100 0.154 -29.9 1.68e-196

#> 2 grade7th 1.56 0.175 2.54 1.10e- 2

#> 3 grade8th 2.63 0.161 6.02 1.73e- 9

#> 4 grade9th 3.99 0.155 8.93 4.41e- 19

#> 5 grade10th 6.81 0.151 12.7 7.94e- 37

#> # ℹ 8 more rows14.1.1 Practice Question

Fit a logistic regression model with cigarette use as the outcome and age, race_and_ethnicity, LGBT, and family_affluence as well as an interaction between family_affluence and race_and_ethnicity as independent variables. Your AIC should be 2430.8.

# Insert your solution here:14.2 Residuals, Discrimination, and Calibration

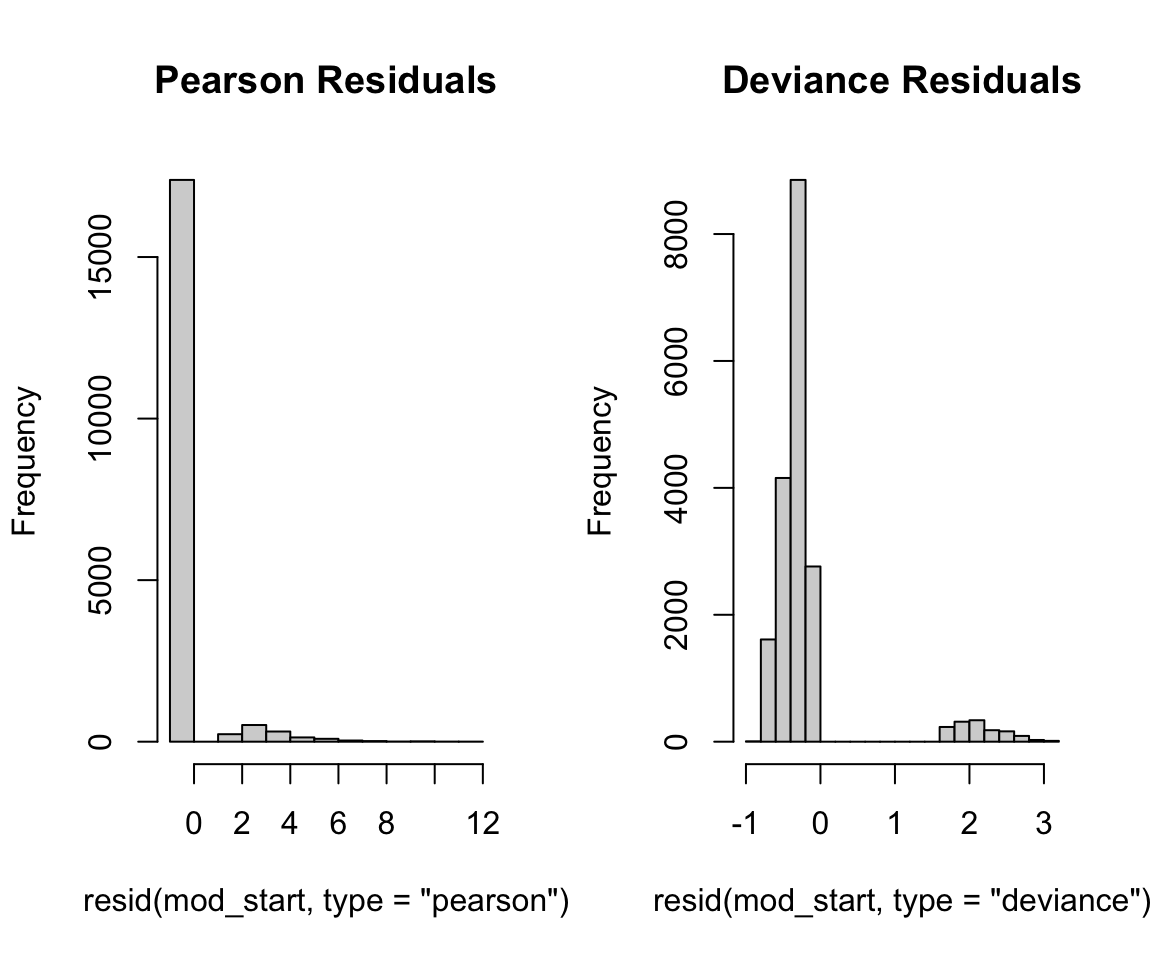

Next, we look at the distribution of the residuals. The resid() function can be used to find the residuals again, but this time we might want to specify the Pearson and deviance residuals by specifying the type argument. We plot histograms for both of these residual types using the following code. In both plots, we can observe a multi-modal distribution, which reflects the binary nature of our outcome.

par(mfrow=c(1,2))

hist(resid(mod_start, type = "pearson"), main = "Pearson Residuals")

hist(resid(mod_start, type = "deviance"), main = "Deviance Residuals")

To further evaluate the fit of our model, we may want to observe the predicted probabilities. The predict() function by default returns the predicted value on the scale of the linear predictors. In this case, that is the predicted log odds. If we want to find the predicted probabilities, we can update the argument by specifying type="response". Additionally, we can predict on data not used to train the model by using the argument newdata. Note that there are only 18,747 predicted probabilities despite our training data having more observations. This is because the glm() function (and lm() function) drop any observations with NA values when training. In the last chapter, we omitted incomplete cases prior to analysis so that the predicted probabilities corresponded directly to the rows in our data.

pred_probs <- predict(mod_start, type = "response")

length(pred_probs)

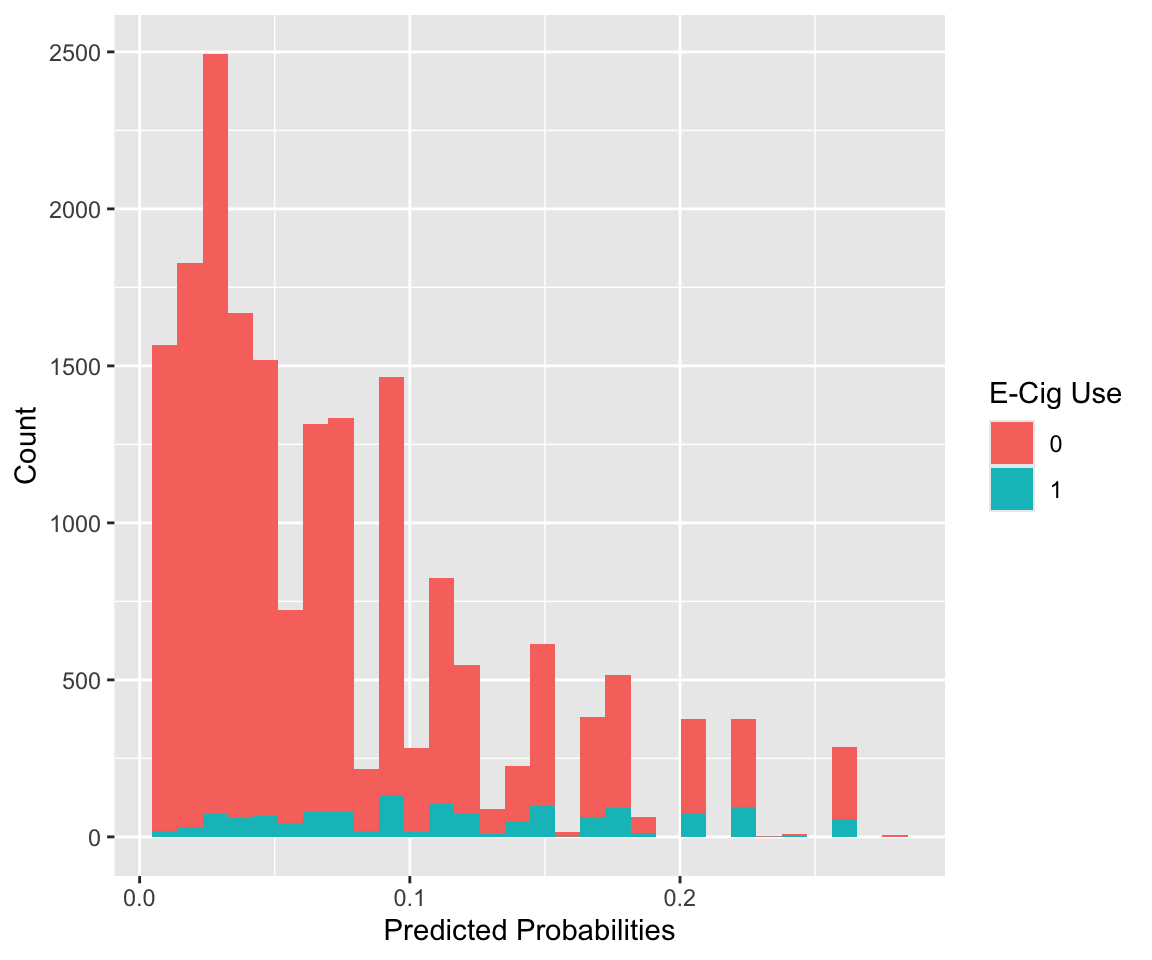

#> [1] 18747If we want to find the class for each observation used in fitting the model, we can use the model’s output, which stores the model matrix x and the outcome vector y. We plot the distribution of estimated probabilities for each class. Note that all the predicted probabilities are below 0.5, the typical cut-off for prediction. This is in part due to the fact that we have such an imbalanced outcome.

ggplot() +

geom_histogram(aes(x = pred_probs, fill = as.factor(mod_start$y)),

bins = 30) +

scale_fill_discrete(name = "E-Cig Use") +

labs(x = "Predicted Probabilities", y = "Count")

14.2.1 Receiver Operating Characteristic Curve

We now plot the receiver operating characteristic (ROC) curve and compute the area under the curve (AUC). The roc() function from the pROC package builds a ROC curve. The function has several ways to specify a response and predictor. For example, we can specify the response vector response and predictor vector predictor. By default, with a 0/1 outcome, the roc() function assumes class 0 is controls and class 1 is cases. We can also specify this in the levels argument to set the value of the response for controls and cases, respectively. Additionally, the function assumes the predictor vector specifies predicted probabilities for the class 1. We can change the argument direction = ">" if the opposite is true. We can plot the ROC curve by calling the plot() function. We can add some extra information by adding the AUC (print.auc = TRUE) and the threshold that maximizes sensitivity + specificity (print.thres = TRUE).

roc_mod <- roc(predictor = pred_probs,

response = as.factor(mod_start$y),

levels = c(0,1), direction = "<")

plot(roc_mod, print.auc = TRUE, print.thres = TRUE)

If we want to understand more about the curve, we can use the coords() function to find the coordinates for each threshold used to create the curve. The argument x= "all" specifies that we want to find all thresholds, but we could also specify only to return local maxima.

roc_vals <- coords(roc = roc_mod, x = "all")

head(roc_vals)

#> threshold specificity sensitivity

#> 1 -Inf 0.00000 1.000

#> 2 0.00569 0.00523 1.000

#> 3 0.00713 0.01070 1.000

#> 4 0.00850 0.01547 0.999

#> 5 0.00934 0.01835 0.998

#> 6 0.00982 0.02404 0.996For example, we could use this information to find the highest threshold with a corresponding sensitivity above 0.75. This returns a threshold of 0.062. If we were to predict class 1 for all observations with a predicted probability above 0.062, then we would achieve a sensitivity of 0.77 and specificity of 0.56 on the training data.

roc_vals[roc_vals$sensitivity > 0.75, ] %>% tail(n = 1)

#> threshold specificity sensitivity

#> 63 0.062 0.555 0.768We use the threshold of 0.080 indicated on our ROC curve to create predicted classes for our response. By comparing the result to our outcome using the table() function, we can directly calculate measures like sensitivity, specificity, positive and negative predictive values, and overall accuracy.

pred_ys <- ifelse(pred_probs > 0.08, 1, 0)

tab_outcome <- table(mod_start$y, pred_ys)

tab_outcome

#> pred_ys

#> 0 1

#> 0 11992 5395

#> 1 455 905sens <- tab_outcome[2, 2]/(tab_outcome[2, 1]+tab_outcome[2, 2])

spec <- tab_outcome[1, 1]/(tab_outcome[1, 1]+tab_outcome[1, 2])

ppv <- tab_outcome[2, 2]/(tab_outcome[1, 2]+tab_outcome[2, 2])

npv <- tab_outcome[1, 1]/(tab_outcome[1, 1]+tab_outcome[2, 1])

acc <- (tab_outcome[1, 1]+tab_outcome[2, 2])/sum(tab_outcome)data.frame(Measures = c("Sens", "Spec", "PPV", "NPV", "Acc"),

Values = round(c(sens, spec, ppv, npv, acc),3))

#> Measures Values

#> 1 Sens 0.665

#> 2 Spec 0.690

#> 3 PPV 0.144

#> 4 NPV 0.963

#> 5 Acc 0.68814.2.2 Calibration Plot

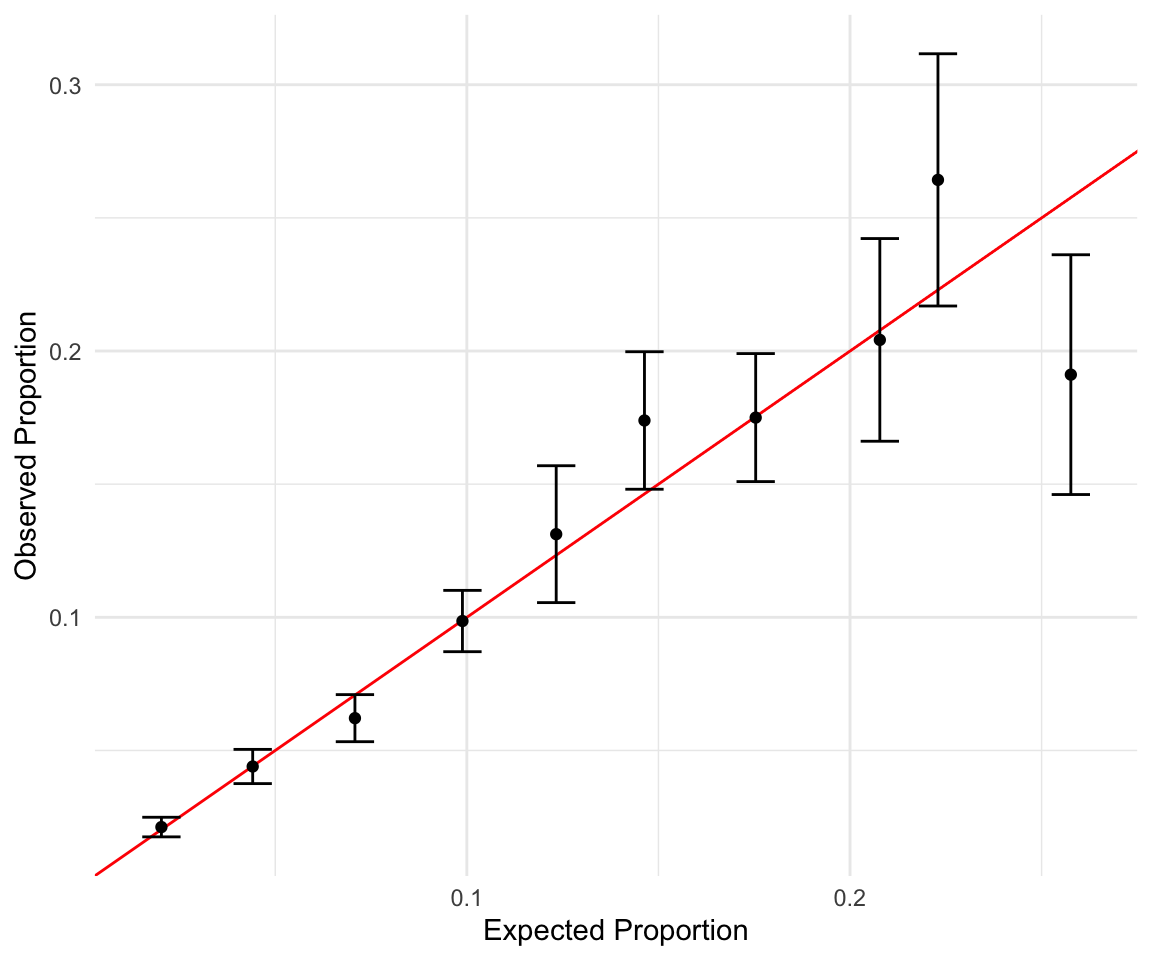

Another useful plot is a calibration plot. This type of plot groups the data by the estimated probabilities and compares the mean probability with the observed proportion of observations in class 1. It visualizes how close our estimated distribution and true distribution are to each other. There are several packages that can create calibration plots, but we demonstrate how to do this using the ggplot2 package. First, we create a data frame with the predicted probabilities and the outcome variable. Additionally, we group this data into num_cuts groups based on the predicted probabilities using the cut() function. Within each group, we find the model’s predicted mean along with the observed proportion and estimated standard errors.

num_cuts <- 10

calib_data <- data.frame(prob = pred_probs,

bin = cut(pred_probs, breaks = num_cuts),

class = mod_start$y)

calib_data <- calib_data %>%

group_by(bin) %>%

summarize(observed = sum(class)/n(),

expected = sum(prob)/n(),

se = sqrt(observed * (1-observed) / n()))

calib_data

#> # A tibble: 10 × 4

#> bin observed expected se

#> <fct> <dbl> <dbl> <dbl>

#> 1 (0.00488,0.0322] 0.0212 0.0203 0.00188

#> 2 (0.0322,0.0592] 0.0440 0.0441 0.00328

#> 3 (0.0592,0.0862] 0.0621 0.0708 0.00451

#> 4 (0.0862,0.113] 0.0986 0.0988 0.00587

#> 5 (0.113,0.14] 0.131 0.123 0.0131

#> # ℹ 5 more rowsNext, we plot the observed vs. expected proportions. We also used the estimated standard error to create corresponding 95% confidence intervals. The red line indicates a perfect fit where our estimated and true distributions match. Overall, the plot shows that our model could be better calibrated.

ggplot(calib_data) +

geom_abline(intercept = 0, slope = 1, color = "red") +

geom_errorbar(aes(x = expected, ymin = observed - 1.96 * se,

ymax = observed + 1.96 * se),

colour="black", width=.01)+

geom_point(aes(x = expected, y = observed)) +

labs(x = "Expected Proportion", y = "Observed Proportion") +

theme_minimal()

14.2.3 Practice Question

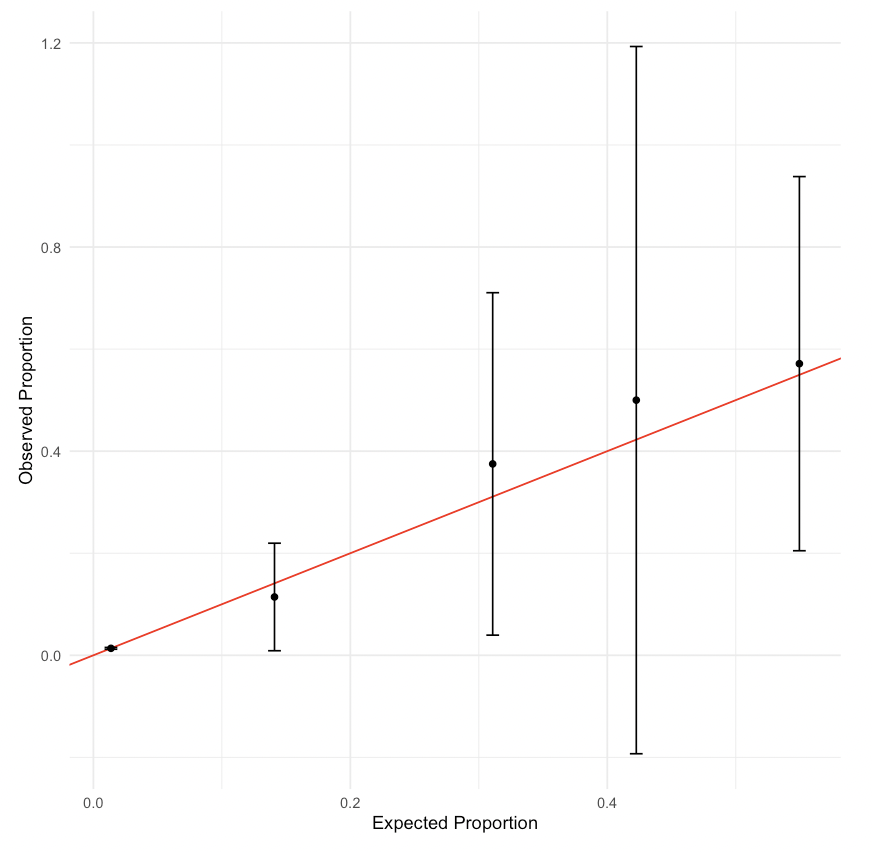

Create a calibration plot with five cuts for your model from the previous practice question (recall that this model should have cigarette use as the outcome and age, race_and_ethnicity, LGBT, and family_affluence as well as an interaction between family_affluence and race_and_ethnicity as independent variables). It should look like Figure 14.1.

# Insert your solution here:14.3 Variable Selection and Likelihood Ratio Tests

In the last chapter, we introduced the step() function to implement stepwise variable selection. This function also works with glm objects. In this case, we use this function to implement backward selection from a larger set of covariates. We first remove any observations with NA values to ensure that our training data does not change size as the formula changes.

nyts_sub <- nyts %>%

dplyr::select(location, sex, grade, otherlang, grades_in_past_year,

perceived_e_cig_use, race_and_ethnicity, LGBT,

psych_distress, family_affluence, e_cig_use) %>%

na.omit()

head(nyts_sub)

#> # A tibble: 6 × 11

#> location sex grade otherlang grades_in_past_year perceived_e_cig_use

#> <fct> <fct> <fct> <fct> <fct> <dbl>

#> 1 school Male 6th No Mostly A's 0

#> 2 school Fema… 6th No Mostly A's 0

#> 3 school Fema… 6th No Mostly C's 0

#> 4 school Fema… 6th No Mostly A's 0

#> 5 school Fema… 6th No Mostly B's 0

#> 6 school Male 6th No Not Sure 0

#> # ℹ 5 more variables: race_and_ethnicity <chr>, LGBT <chr>,

#> # psych_distress <chr>, family_affluence <chr>, e_cig_use <fct>To implement backward selection, we first create a model with all the covariates included. The period . in the formula indicates that we want to include all variables. Next, we use the step() function. Since we are using backward selection, we only need to specify the lower formula in the scope.

model_full <- glm(e_cig_use ~ ., data = nyts_sub, family = binomial)

mod_step <- step(model_full, direction = 'backward',

scope = list(lower = "e_cig_use ~ sex + grade +

race_and_ethnicity + location"))

#> Start: AIC=6093

#> e_cig_use ~ location + sex + grade + otherlang + grades_in_past_year +

#> perceived_e_cig_use + race_and_ethnicity + LGBT + psych_distress +

#> family_affluence

#>

#> Df Deviance AIC

#> - family_affluence 2 6038 6090

#> <none> 6037 6093

#> - otherlang 1 6042 6096

#> - LGBT 2 6051 6103

#> - psych_distress 3 6106 6156

#> - grades_in_past_year 6 6126 6170

#> - perceived_e_cig_use 1 6416 6470

#>

#> Step: AIC=6090

#> e_cig_use ~ location + sex + grade + otherlang + grades_in_past_year +

#> perceived_e_cig_use + race_and_ethnicity + LGBT + psych_distress

#>

#> Df Deviance AIC

#> <none> 6038 6090

#> - otherlang 1 6043 6093

#> - LGBT 2 6052 6100

#> - psych_distress 3 6106 6152

#> - grades_in_past_year 6 6128 6168

#> - perceived_e_cig_use 1 6418 6468Stepwise selection keeps most variables in the model and only drops family affluence. In the following output, we can see the AUC for this model has improved to 0.818.

roc_mod_step <- roc(predictor = predict(mod_step, type = "response"),

response = as.factor(mod_step$y),

levels = c(0, 1), direction = "<")

plot(roc_mod_step, print.auc = TRUE, print.thres = TRUE)

If we want to compare this model to our previous one, we could use a likelihood ratio test since the two models are nested. The lrtest() function from the lmtest package allows us to input two nested glm models and performs a corresponding Chi-squared likelihood ratio test. First, we need to ensure that our initial model is fit on the same data used in the stepwise selection. The output indicates a statistically significant improvement in the model likelihood with the inclusion of the other variables.

mod_start2 <- glm(e_cig_use ~ grade + sex + race_and_ethnicity +

location, data = nyts_sub, family = binomial)print(lrtest(mod_start2, mod_step))

#> Likelihood ratio test

#>

#> Model 1: e_cig_use ~ grade + sex + race_and_ethnicity + location

#> Model 2: e_cig_use ~ location + sex + grade + otherlang + grades_in_past_year +

#> perceived_e_cig_use + race_and_ethnicity + LGBT + psych_distress

#> #Df LogLik Df Chisq Pr(>Chisq)

#> 1 13 -3369

#> 2 26 -3019 13 701 <2e-16 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 114.4 Extending Beyond Binary Outcomes

The glm() function can be used to fit models for other possible families and non-binary outcomes. For example, we can fit models where the outcome might follow a Poisson distribution or negative binomial distribution by updating the family argument. In the following code, we fit a Poisson model to model the number of e-cigarettes used in the last 30 days by setting family = poisson. However, despite our outcome being a count value, this model does not appear to be a good fit for our data.

mod_poisson <- glm(num_e_cigs ~ grade + sex + race_and_ethnicity +

location, data = nyts, family = poisson)par(mfrow=c(1,2))

hist(predict(mod_poisson, type = "response"), main = "Model",

xlab = "Predicted Values")

hist(nyts$num_e_cigs, main = "Observed", xlab = "Number E-Cigs")

14.5 Recap Video

14.6 Exercises

Create a new variable

tobacco_userepresenting any tobacco use in the past 30 days (including e-cigarettes, cigarettes, and/or cigars), as well as a new variableperceived_tobacco_useequal to the maximum of the perceived cigarette and e-cigarette use. Then, create a new data framenyts_subthat contains these two new columns as well as columns for sex, grades in the past year, psych distress, and family affluence. Finally, fit a logistic regression model with this new tobacco use variable as the outcome and all other selected variables as independent variables.Perform stepwise selection on your model from Question 1 with

direction = "both", setting the upper scope of the model selection procedure to be a model including all two-way interactions and the lower scope to be a model including only an intercept. To specify all possible interactions, you can use the formula"tobacco_use ~ .^2". Use thetidy()function to display the exponentiated estimated coefficients for the resulting model along with a confidence interval.According to your model from Question 2, what is the estimated probability of tobacco use for a girl with mostly Cs, moderate psych distress, and a perceived tobacco use of 0.5? Use the

predict()function to answer this question.Construct a ROC curve for the model from Question 2 and find the AUC as well as the threshold that maximizes sensitivity and specificity.